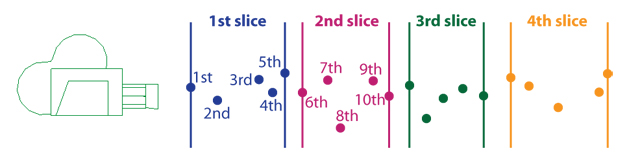

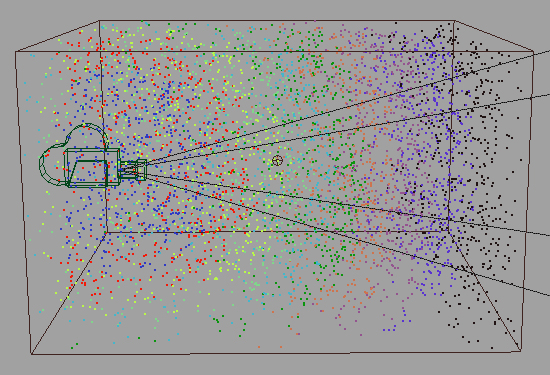

In my previous project, Cloud, I rendered RiPoints using a RenderMan DSO primitive. I could render around 3 million points with motion blur and deep shadows, but then I read an article saying that Sony Pictures Imageworks rendered 500 million points for the water in Surf's Up. As I understood it, they cut the particles into slices along the camera direction, each holding a certain number of particles, rendered the slices separately, and combined them in 2D. It makes sense: no single computer has enough memory for 500 million particles, but a render farm can supply enough render nodes to handle them one slice at a time.

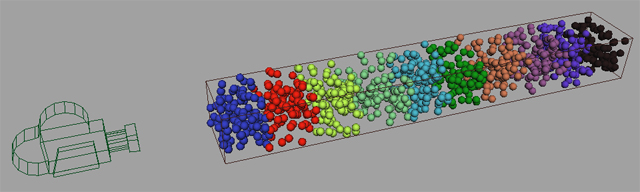

So I decided to try this slice rendering approach this quarter. It can be useful not only for RiPoints; I will also make it work with external archives, so it can be used for things like crowd rendering.

Before starting, I needed to check my limitations.

1. 10 weeks for development, documentation, and applying it in an actual shot.

2. 1GB render farm space for the project folder

3. 2 Hours render time per frame

4. Unable to install any custom plug-ins in the farm

So far, the factors I consider important are:

1. The amount of particles

2. Distribution of secondary particles

3. Simplification of usage

First, I tried slicing the guide particles along the camera direction. I had to decide where the slicing should happen: in the RenderMan DSO or in the Maya UI? I started on the Maya side because keeping the whole process fast is especially important for this project. To make slices that hold exactly the same number of particles, the particles have to be ordered by distance from the render camera, and producing that order requires sorting, one of the most expensive operations. If I sorted on the DSO side, after the particle count has already been expanded, I would not be able to see the result; sorting before the expansion is much more manageable.
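The sort-then-slice idea can be sketched in a few lines of Python. This is only an illustration, not the Maya-side code from the project: the function name `slice_by_camera_distance` is hypothetical, "distance" is assumed to be the straight-line distance to the camera position, and Python's built-in `sorted` (Timsort) stands in for Quick Sort.

```python
import math

def slice_by_camera_distance(points, camera_pos, num_slices):
    """Sort points far-to-near from the camera, then cut them into
    slices that each hold (almost) the same number of points."""
    if not points:
        return []
    # Farthest first, so slice 0 is the back-most layer.
    # Assumption: order by Euclidean distance to the camera position.
    ordered = sorted(points, key=lambda p: math.dist(p, camera_pos), reverse=True)
    # Ceiling division so no point is left out of the last slice.
    per_slice = -(-len(ordered) // num_slices)
    return [ordered[i:i + per_slice] for i in range(0, len(ordered), per_slice)]

guide_points = [(0, 0, -1), (0, 0, -5), (0, 0, -3), (0, 0, -2)]
slices = slice_by_camera_distance(guide_points, (0, 0, 0), 2)
for index, chunk in enumerate(slices):
    print(index, chunk)
```

Because the sort runs on the guide particles only, its cost depends on the count before expansion, which is the point of doing it on the Maya side.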

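I used Quick Sort, which is one of the fastest general-purpose sorting algorithms in practice: on average it needs on the order of n log n comparisons.

Using xSortLab at the link below, I tested how much faster Quick Sort is than Bubble Sort, which repeatedly compares neighboring elements across the whole array.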

http://math.hws.edu/TMCM/java/xSortLab/

| Bubble Sort | Quick Sort |
| --- | --- |
| Bubble Sort applied to 1 array containing 100000 items: | Quick Sort applied to 1 array containing 100000 items: |
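A similar comparison can be reproduced locally with a small Python sketch. The implementations below are textbook versions written for illustration (the quick sort builds new lists rather than partitioning in place), and the array is kept small so the bubble sort finishes quickly; actual timings depend on the machine, so none are quoted here.

```python
import random
import time

def bubble_sort(values):
    """O(n^2): repeatedly swap adjacent elements that are out of order."""
    a = list(values)
    for end in range(len(a) - 1, 0, -1):
        swapped = False
        for i in range(end):
            if a[i] > a[i + 1]:
                a[i], a[i + 1] = a[i + 1], a[i]
                swapped = True
        if not swapped:  # already sorted, stop early
            break
    return a

def quick_sort(values):
    """Average O(n log n): split around a pivot, then sort each side."""
    a = list(values)
    if len(a) <= 1:
        return a
    pivot = a[len(a) // 2]
    less = [v for v in a if v < pivot]
    equal = [v for v in a if v == pivot]
    greater = [v for v in a if v > pivot]
    return quick_sort(less) + equal + quick_sort(greater)

def time_sort(sort_fn, data):
    """Return (elapsed seconds, sorted result) for one run of sort_fn."""
    start = time.perf_counter()
    result = sort_fn(data)
    return time.perf_counter() - start, result

data = [random.random() for _ in range(2000)]
for fn in (bubble_sort, quick_sort):
    elapsed, _ = time_sort(fn, data)
    print(f"{fn.__name__}: {elapsed:.4f} s for {len(data)} items")
```

Increasing the array size makes the gap grow quickly, since doubling the input roughly quadruples the work for bubble sort but only slightly more than doubles it for quick sort.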

The next problem I didn’t expect was this.

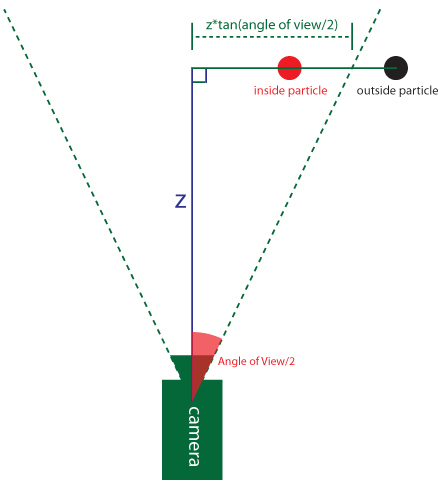

Even with Quick Sort, sorting is still an expensive process, and I don't want to spend it on particles I won't use, so I wrote a camera-space culling function. If the camera is at the origin, culling is pretty simple.

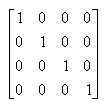
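With the camera at the origin, the visibility test reduces to comparing each point against the view frustum. The sketch below is an assumption-laden illustration rather than the project's function: it assumes the camera looks down the negative Z axis (as Maya cameras do), takes horizontal and vertical field-of-view angles in degrees, and uses the hypothetical names `in_frustum` and `cull`.

```python
import math

def in_frustum(point, h_fov_deg, v_fov_deg, near, far):
    """True if a camera-space point lies inside the view frustum.
    Assumes the camera sits at the origin looking down -Z."""
    x, y, z = point
    depth = -z  # points in front of the camera have negative z
    if depth < near or depth > far:
        return False
    # Half-width and half-height of the frustum at this depth.
    half_w = depth * math.tan(math.radians(h_fov_deg) / 2)
    half_h = depth * math.tan(math.radians(v_fov_deg) / 2)
    return abs(x) <= half_w and abs(y) <= half_h

def cull(points, h_fov_deg, v_fov_deg, near, far):
    """Keep only the points the camera can see."""
    return [p for p in points if in_frustum(p, h_fov_deg, v_fov_deg, near, far)]

points = [(0, 0, -5), (0, 0, 5), (10, 0, -5), (4, 1, -5)]
print(cull(points, 90, 90, 0.1, 100))
```

Only the surviving points need to be sorted, which keeps the expensive step proportional to what is actually on screen.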

Of course, the camera is not always at the origin, so I needed a space-transform function that uses a 4×4 matrix, which is another way to describe an object's transform in 3D space instead of separate translation, rotation, and scale values.

The identity matrix (ones on the diagonal, zeros everywhere else) represents translation 0 0 0, rotation 0 0 0, and scale 1 1 1, which means an untransformed object at the origin.

I also needed the inverse of the camera's transform matrix. Multiplying the camera transform matrix by its inverse gives the identity matrix, so multiplying each particle's position by the inverse of the camera transform gives the particle's position in camera space, as if the camera were at the origin. That lets me keep only the particles the camera sees.
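As a rough sketch of that transform, the function below moves a world-space point into camera space. To stay short it does not compute a general 4×4 inverse: it assumes the camera transform is rigid (rotation and translation only, no scale), in which case the inverse is just "subtract the translation, then project onto the camera's axes". It also assumes the row-vector layout Maya uses, with the camera's X, Y, and Z axes in the first three rows and the translation in the last row. The name `world_to_camera` is hypothetical.

```python
def world_to_camera(point, cam_matrix):
    """Transform a world-space point into camera space.

    cam_matrix is a 4x4 nested list in row-vector layout: rows 0-2 hold
    the camera's axes in world space, row 3 holds its translation.
    Assumption: the matrix is rigid (no scale or shear), so its inverse
    is the transposed rotation applied after removing the translation.
    """
    tx, ty, tz = cam_matrix[3][:3]
    d = (point[0] - tx, point[1] - ty, point[2] - tz)
    # Dot the offset with each camera axis to get camera-space x, y, z.
    return tuple(sum(d[i] * cam_matrix[axis][i] for i in range(3))
                 for axis in range(3))

# Camera moved to (0, 0, 10), no rotation: the world origin ends up
# 10 units in front of the camera (negative z in camera space).
camera = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 10, 1],
]
print(world_to_camera((0, 0, 0), camera))
```

Once every particle is expressed this way, the simple camera-at-origin culling test applies unchanged. A camera with scale in its transform would need a full matrix inverse instead.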

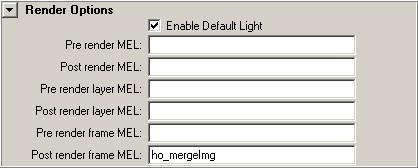

Another thing I tried at this stage is render-time compositing. Each frame can produce a lot of image files: for example, to render 100 million particles with 2 million particles per slice, I need 50 slices, which means each frame generates 50 image files. I don't have enough space on the render farm for that, and I also don't want to deal with such a huge number of files by hand.

I figured that if each newly rendered image used an existing image as its background, I would never need to keep many images at once. In other words, every rendered slice image can use the slice image behind it as its background.

I found RenderMan's Display merge option in the Pixar documentation, but for some reason it didn't work and I couldn't find out why, so I used tiffcomp.exe, which is in the same folder as prman.exe.

This MEL script runs tiffcomp.exe with the system() command after each frame finishes rendering.
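The running-background idea amounts to applying the standard "over" operation slice by slice, from the farthest slice to the nearest. The sketch below illustrates it on plain lists of pixels; it assumes premultiplied RGBA values in the 0 to 1 range, and the function names are hypothetical. It is not what the renderer or any compositing tool does internally.

```python
def over(fg, bg):
    """Composite one premultiplied RGBA pixel over another."""
    remaining = 1.0 - fg[3]  # how much of the background shows through
    return tuple(f + b * remaining for f, b in zip(fg, bg))

def composite_slices(slices_back_to_front):
    """Fold slice images into one image, keeping only a single
    running result in memory instead of every slice.
    Each slice is a list of premultiplied RGBA pixels of equal length."""
    result = slices_back_to_front[0]
    for layer in slices_back_to_front[1:]:
        result = [over(f, b) for f, b in zip(layer, result)]
    return result

back = [(0.0, 0.0, 1.0, 1.0)]    # opaque blue, farthest slice
front = [(0.5, 0.0, 0.0, 0.5)]   # half-transparent red, nearest slice
print(composite_slices([back, front]))
```

Since only the running result and the newest slice exist at any moment, the number of files on disk stays constant no matter how many slices a frame needs.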

This is the test sequence from a batch render. For now I have tried this with a multi-frame sequence, but later I will do it within a single frame using multiple slice images.