I started with the second approach, but in the end I combined the two ideas.

Mushroom Cloud

Simulation : FumeFx, Rendering : Renderman

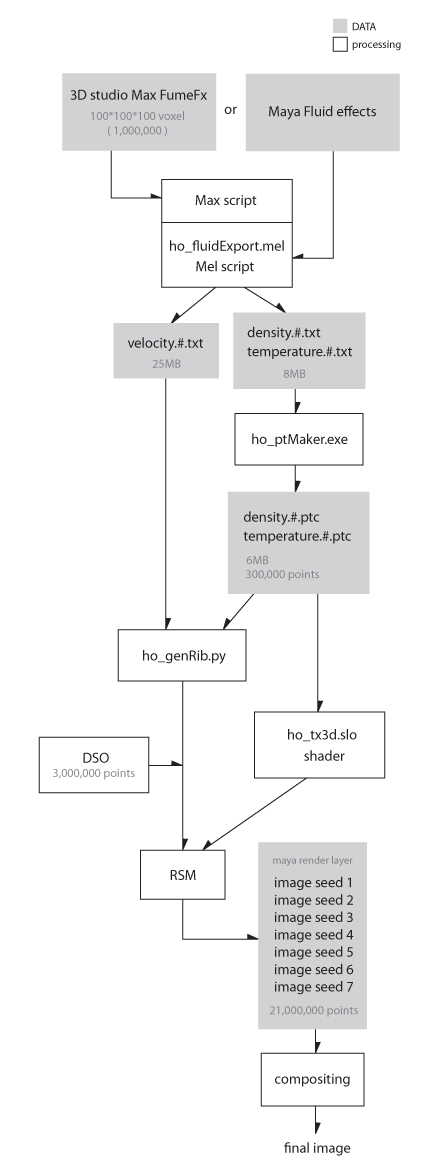

The key idea for using voxel data in Renderman is the point cloud. In my previous project, I found that a 3D texture such as a point cloud or brick map can be used in a volume shader. I thought that if I could bake the voxel density data out to a point cloud or brick map, I could use it as the opacity value for a volume shader. I also found that both Maya Fluid Effects and 3ds Max FumeFx provide MEL and MAXScript commands for accessing the data of each voxel.

MEL command: getFluidAttr -at "density" -xi 1 -yi 2 -zi 3;

MAXScript: getSmoke(i,j,k);

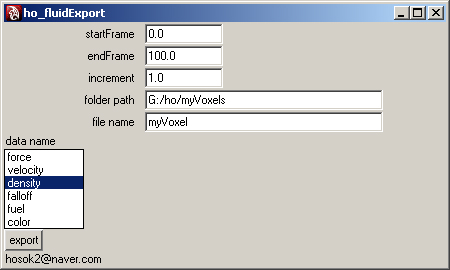

First, I exported the smoke data from FumeFx as a text file that includes the data name, data type, voxel resolution, bounding box information, and smoke value, which is the same as density. This is the MAXScript. Since FumeFx is still an early version, scripting has some limitations. I can run a FumeFx script only through the FumeFx interface, which means a text file can be exported only while the simulation is running. This was a pretty serious problem because writing a file could sometimes take more time than the simulation itself. For motion blur in particular, I baked the velocity value, which is vector data, at a voxel resolution of 200*200*200. The file for each frame was up to 600MB and saving it took a long time (20 minutes...), and if I didn't like the simulation, I had to run both the simulation and the export again. I think a binary file format could save some time, which will be my future work.
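To give a rough idea of what a binary export could save, here is a minimal Python sketch. It is not the FumeFx format or my actual exporter: the header-less layout, the little-endian 32-bit floats, and all function names are assumptions made for illustration.

```python
import struct

def pack_velocity_grid(velocities):
    """Pack (vx, vy, vz) tuples as little-endian 32-bit floats (assumed layout)."""
    out = bytearray()
    for vx, vy, vz in velocities:
        out += struct.pack("<3f", vx, vy, vz)
    return bytes(out)

def unpack_velocity_grid(data):
    """Read the packed floats back as a list of (vx, vy, vz) tuples."""
    return [struct.unpack_from("<3f", data, offset)
            for offset in range(0, len(data), 12)]

def binary_size_bytes(resolution, components=3, bytes_per_value=4):
    """Raw payload size of a cubic grid with no header and no compression."""
    return resolution ** 3 * components * bytes_per_value

sample = [(0.5, -1.25, 2.0), (0.0, 0.0, 0.0)]
packed = pack_velocity_grid(sample)
```

Under these assumptions, a 200*200*200 velocity grid is 96,000,000 bytes of raw floats, compared with the text files of up to 600MB described above.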

For Maya, I wrote this script.

To make a point cloud file from the text file, I wrote a command-line program (ho_ptcMaker.exe) that uses the point cloud API. The Renderman point cloud API has pretty simple functions, but they are good enough. My ptcMaker reads the text file that I exported from 3ds Max or Maya and writes the points into a point cloud file (*.ptc), so each point represents one voxel. This is the C++ code.

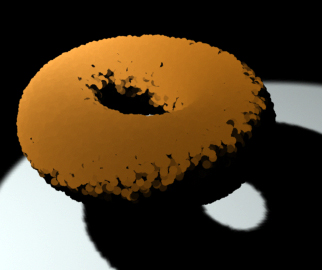

I tried to convert the point cloud file to a brick map, but I found that a brick map has a problem: it loses some data. For example, 10 points in a point cloud file should become 10 voxels in a brick map, but they become fewer than 10 voxels. So I decided to use only a point cloud, which is pretty doable. A 100 * 100 * 100 point cloud file is around 6MB. Actually, my 100 * 100 * 100 voxel container doesn't contain 1,000,000 points. I deleted voxels that have a density value less than 0.0001, so approximately 300,000 points are in each ptc file.
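The culling step can be sketched in Python as below. This is only an illustration, not the code of ptcMaker: the dictionary input, the function names, and the choice to place each point at the voxel center are assumptions.

```python
def voxel_center(index, resolution, bbox_min, bbox_max):
    """World-space center of voxel (i, j, k) inside the bounding box (assumed placement)."""
    return tuple(
        lo + (i + 0.5) * (hi - lo) / n
        for i, n, lo, hi in zip(index, resolution, bbox_min, bbox_max)
    )

def cull_voxels(density, resolution, bbox_min, bbox_max, threshold=0.0001):
    """Keep only voxels whose density is at least `threshold`.

    `density` maps (i, j, k) indices to density values.
    Returns a list of (position, density) pairs, one per surviving voxel.
    """
    points = []
    for index, value in density.items():
        if value >= threshold:
            position = voxel_center(index, resolution, bbox_min, bbox_max)
            points.append((position, value))
    return points

grid = {(0, 0, 0): 0.5, (1, 0, 0): 0.00005, (1, 1, 1): 0.0001}
kept = cull_voxels(grid, (2, 2, 2), (0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
```

Each surviving pair would then be written as one point in the ptc file, with the density stored as the point's data.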

Next, I tried a volume shader that gets density data from the point cloud file as an opacity value using ray marching. It seemed fine, but when I implemented self-shadowing, the render took forever, and there was no benefit to using Renderman instead of the Maya renderer or the FumeFx volume shader.
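For reference, the ray marching idea looks roughly like this in Python. It is a generic sketch, not my RSL shader: `density_at` stands in for the point cloud lookup, and the exponential absorption model and all names are assumptions. The last function shows one likely reason self-shadowing gets so expensive: if every sample along the view ray marches a second ray toward the light, the number of lookups multiplies.

```python
import math

def march_opacity(density_at, origin, direction, step, num_steps, absorption=1.0):
    """Accumulate opacity along a ray by sampling density at fixed steps."""
    transmittance = 1.0
    for n in range(num_steps):
        t = (n + 0.5) * step  # sample at the middle of each step
        p = tuple(o + d * t for o, d in zip(origin, direction))
        transmittance *= math.exp(-absorption * density_at(p) * step)
    return 1.0 - transmittance

def lookups_per_ray(view_steps, shadow_steps):
    """Density lookups when each view sample also marches a shadow ray."""
    return view_steps * (1 + shadow_steps)

# Constant density of 1.0 over a total distance of 1.0
opacity = march_opacity(lambda p: 1.0, (0, 0, 0), (0, 0, 1), 0.1, 10)
```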

So, I went back to the first idea, which is using RiPoints. First, I tried the RiRunprogram procedural and the Python helper program that I wrote for the previous project. This time, however, I was calling the Python script at each voxel position, which means I was running the script 300,000 times. I tried to render it and it also took forever. The problem is that the Python script and the renderer communicate through stdin and stdout, which is a bottleneck, so I started to write a DSO procedural primitive using the RSL plug-in API. There are two types of DSO for Renderman. One is for shaders and the other is for procedural primitives. My primitive gets a seed value from the RIB and generates several points, so 300,000 points can be expanded to 3,000,000 points at render time, which is not only fast but also memory efficient. This is the DSO C++ code.

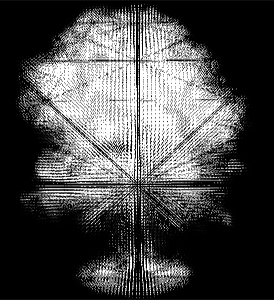

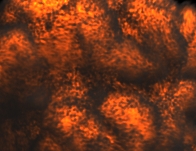

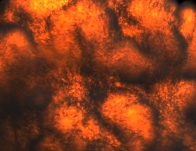
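The seed-driven expansion that the DSO performs (300,000 voxel points becoming 3,000,000 render-time points, so about 10 points per voxel) can be sketched in Python. The real primitive is C++; the uniform jitter inside the voxel and the names below are assumptions for illustration.

```python
import random

def scatter_points(center, voxel_size, count, seed):
    """Generate `count` jittered points inside one voxel, repeatable for a given seed."""
    rng = random.Random(seed)  # same seed gives the same points every time
    half = voxel_size / 2.0
    return [
        tuple(c + rng.uniform(-half, half) for c in center)
        for _ in range(count)
    ]

points_a = scatter_points((1.0, 2.0, 3.0), 0.5, 10, seed=7)
points_b = scatter_points((1.0, 2.0, 3.0), 0.5, 10, seed=7)
points_c = scatter_points((1.0, 2.0, 3.0), 0.5, 10, seed=8)
```

Because the points are derived from the seed alone, only the seed and voxel position need to be stored in the RIB, and changing the seed gives a different but equally dense set of points.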

Rendering RiPoints has another benefit, which is self-shadowing. RiPoints have a normal value, which means I can assign a surface shader that has a diffuse component. With deep shadows, I could get this result.

Another Python script generates the RIB procedural calls at render time. This script emits an RiProcedural "DynamicLoad" call at each voxel position and also implements motion blur using the velocity data from the text file. Originally I wrote this script to write a RIB file to disk, but later I changed it to print the RIB directly to the renderer. There were two reasons. The first was that I have limited project space on my school render farm (1G), and each RIB archive was more than 100MB per frame. The other was that I needed to render the same frame with different seed values so that I could reduce the point-like look by mixing multiple rendered images. To do so, I needed to generate the RIB archive dynamically.

For motion blur, I used the velocity data captured from voxel simulation.

outStr += "MotionBegin [0 1]\n"

outStr += " ConcatTransform [ 1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1 ]\n"

outStr += " ConcatTransform [ 1 0 0 0 0 1 0 0 0 0 1 0 %s %s %s 1 ]\n" % (x,y,z)

outStr += "MotionEnd\n"
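Put together, the RIB for one voxel might be built as in the following sketch. It is not my actual script: the DSO name `ho_points.so`, the argument string passed to it, the bound, and the reading of the offset in the second ConcatTransform as the velocity per frame are all assumptions for illustration.

```python
def voxel_rib(position, velocity, seed, half_size, dso="ho_points.so"):
    """Return the RIB text for one voxel: motion block plus DynamicLoad call."""
    px, py, pz = position
    vx, vy, vz = velocity
    h = half_size
    out = "AttributeBegin\n"
    # Two transform samples: identity at shutter open, velocity offset at close
    out += "MotionBegin [0 1]\n"
    out += " ConcatTransform [ 1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1 ]\n"
    out += " ConcatTransform [ 1 0 0 0 0 1 0 0 0 0 1 0 %s %s %s 1 ]\n" % (vx, vy, vz)
    out += "MotionEnd\n"
    # Move to the voxel position, then let the DSO generate points around it
    out += "Translate %s %s %s\n" % (px, py, pz)
    out += 'Procedural "DynamicLoad" ["%s" "%d"] [%s %s %s %s %s %s]\n' % (
        dso, seed, -h, h, -h, h, -h, h)
    out += "AttributeEnd\n"
    return out

rib = voxel_rib((1.0, 2.0, 3.0), (0.1, 0.0, 0.0), seed=7, half_size=0.5)
```

The bound here covers only the voxel itself; in practice it would need to be padded by the point width so that points near the edge are not clipped.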

Finally, I wrote a surface shader that reads a sequence of density and temperature point cloud files and turns them into opacity and color.

Approximately 3,000,000 semi-transparent points with deep shadows and motion blur was the maximum on an 8G memory Linux machine at the time. Render time for each layer was usually about 7 minutes, and I used 7 render layers in Maya with different seed values, so the total render time for each frame was less than 1 hour and the result looks similar to 21,000,000 points.

Comparison renders: 3,000,000 points and 3,000,000 points * 7.
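Mixing the seven layers can be as simple as averaging them pixel by pixel. The sketch below assumes plain averaging of equally sized images stored as flat lists of values; the actual mix was done on rendered images in compositing, and the function name is hypothetical.

```python
def average_layers(layers):
    """Average equally sized images given as flat lists of pixel values."""
    count = len(layers)
    return [sum(pixels) / count for pixels in zip(*layers)]

# Two tiny "images" of two pixels each, rendered with different seeds
mixed = average_layers([[0.0, 1.0], [1.0, 1.0]])

# Seven layers at about 7 minutes each
total_minutes = 7 * 7
```

A point that appears in only one layer contributes one seventh of its opacity to the mix, which is why the combined image looks smoother than any single layer.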

To be honest, I'm not sure RiPoints is a good solution for cloud rendering, because sprites or a volume shader could be a better solution, and I couldn't completely get rid of the point-like look. However, I did at least find that RiPoints has a lot of potential for effects such as sand or snow. Also, the pointfalloff attribute, which gives points soft edges, was added in Renderman 13.5, although I didn't use it for this project because the school render farm doesn't support it yet. It was also a good experience writing my first C++ software. William helped me a lot with Visual Studio settings and C++ compiling.

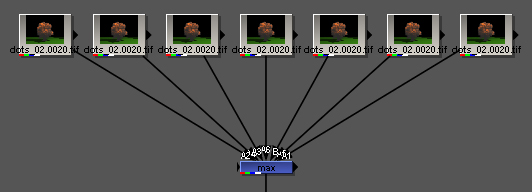

Since the process was pretty complicated, I made this flow chart.